Welcome to the Matrix!

EU AI Act Risk Master Class: Understanding EU AI Act risk classification

Background and context

You may think you know all there is to know about EU AI Act risk classification, because the web is awash with pictures and posts about prohibited-risk, high-risk, limited-risk and minimal-risk AI systems.

But if you work in compliance, risk management, AI operations, AI governance or AI system implementation, you know far less than you think, because there are more than four risk classification categories. This post identifies them, explains why there are more than four, and introduces you to the EU AI Act Risk Classification Matrix.

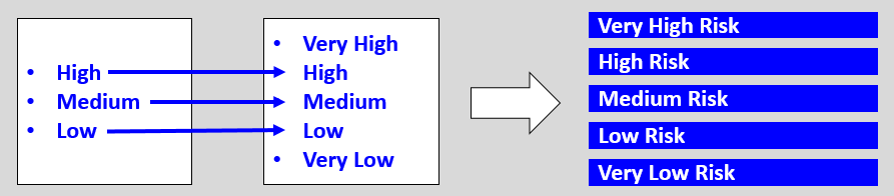

A brief history of risk classification

In the old days, before the EU AI Act was invented, risks fell into three main categories: high, medium and low. Occasionally a risk manager would extend this model to five categories, adding very high risk and very low risk in the process. This led to the Standard Risk Classification Model, which is visualized below.

A brief history of EU risk management

Politicians were invented long before the EU was, but we do not know which came first: politicians or risks. What we do know is that with the EU came EU politicians, and with EU politicians came EU bureaucrats and global lobbyists. Politicians knew little about risk, and only cared about it when it was reputational. Bureaucrats knew enough about risk to avoid the risk managers who wanted to expose it. But lobbyists were risk-savvy and experts at managing emergent risks.

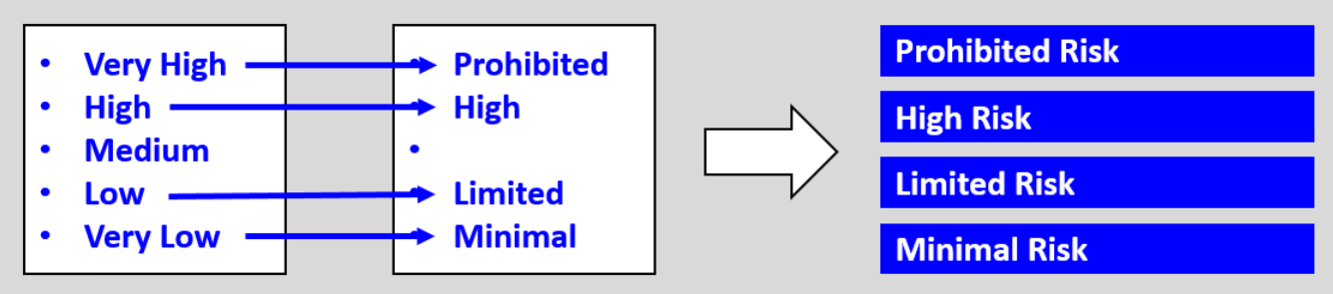

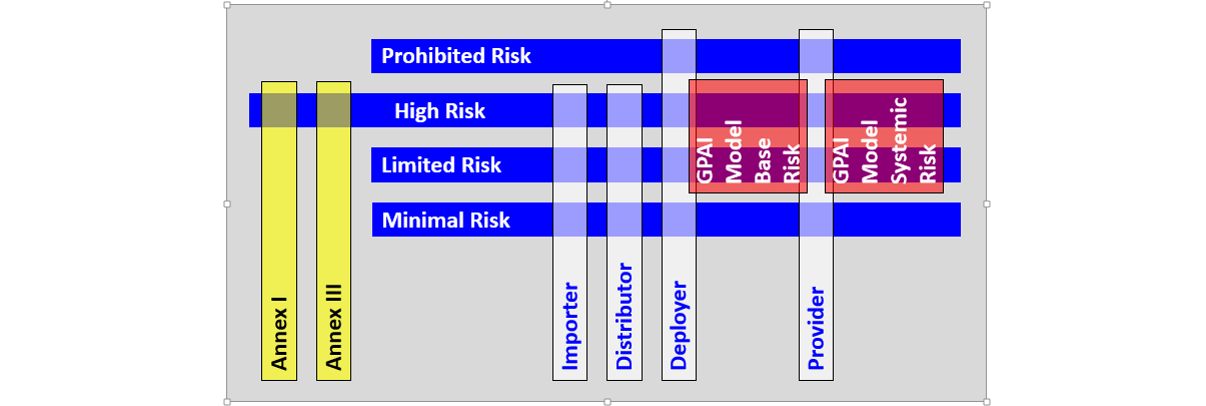

To be fair, this was before the war, and now, to their credit, risk management has moved to where it belongs: the top of the agenda. But the EU AI Act was designed before this happened, which brings us to what they did in the beginning. This is visualized in blue below, as the first dimension of the EU AI Act Risk Classification Matrix.

The mapping is so simple that it does not need an explanation. Most politicians and bureaucrats reading this are thinking: wow, one out of five is a great score! What they should be thinking is whether they should replace their risk management consultants and advisors with LLMs.

Most people think they're done with risk classification at this stage and stop here, but this is a master class, and as you will see there is much more to EU AI Act risk classification than a single dimension with four categories.

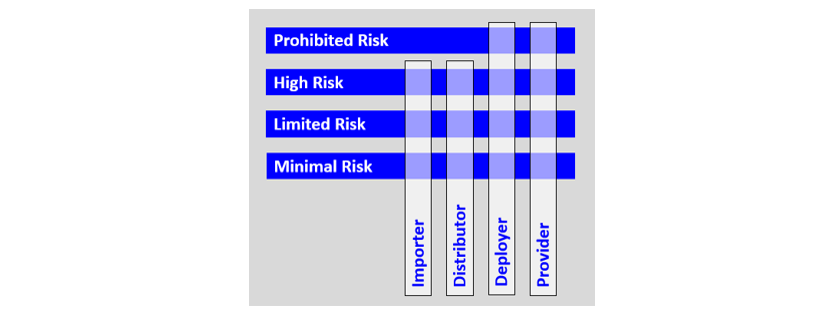

The second dimension of the Risk Classification Matrix

The second dimension, the operator role, is visualized in white above and should not come as a surprise to any of you. If it does, read my post about identity management. The key lesson for those of you working with high risk is that Provider high-risk requirements are not the same as, say, Deployer or Importer high-risk requirements.

The EU AI Act Risk Classification Matrix has more than two dimensions, but before we introduce the others, we need another history lesson.

A brief history of GPAI and GPAI risk

The EU AI Act did not know LLMs existed until ChatGPT fell down from the clouds and took the world by storm. LLMs were initially referred to as Foundation Models, before the EU AI Act settled on the current term, General Purpose AI Models, which covers LLMs and Generative AI models. We abbreviate this to GPAI to reduce our carbon footprint.

The easiest, simplest and most efficient way of addressing this issue would have been to use industry terms like LLM or Generative AI. That way everyone would know what they were talking about. But lobbyists convinced our beloved politicians and service-minded bureaucrats to redefine terms and create new definitions, muddying the waters in the process. Their motivation is simple and straightforward. GPAI providers do not want regulation and are incentivized to create as broad a definition as possible, ideally encompassing most AI systems, because they know that the EU does not want to, and will not, impose harsh requirements on the majority of AI systems.

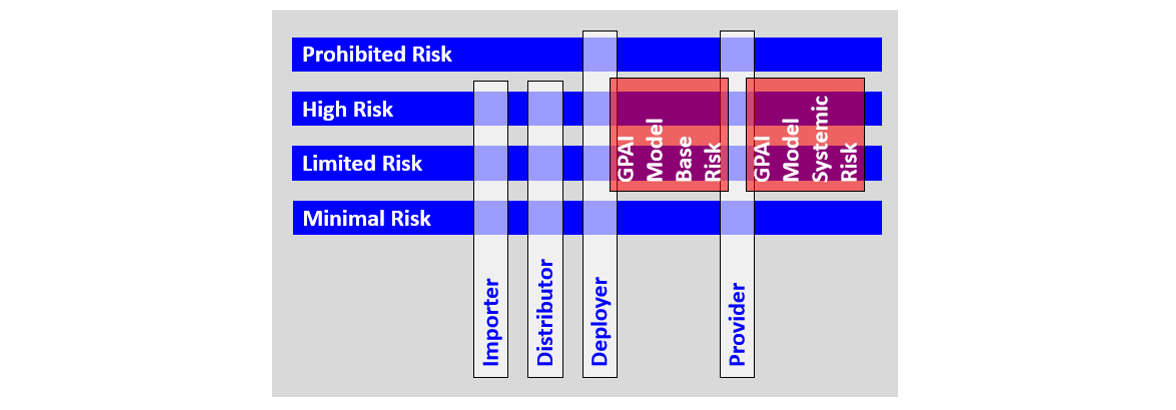

The third dimension of the Risk Classification Matrix

After LLMs were discovered, it was evident that they posed a very high risk and should have been classified as such. But lobbying put an end to that, and risk managers do not appear to have been consulted, except by lobbyists. Instead, a new risk classification dimension was introduced. This dimension addresses GPAI and introduces two new risk classification categories:

GPAI Model Systemic Risk, which indicates that the GPAI model in an AI system poses a systemic risk.

GPAI Model Base Risk, which indicates that the GPAI model in an AI system poses a foundational level of risk.

The third dimension of the EU AI Act Risk Classification Matrix is visualized in red below.

The fourth dimension of the Risk Classification Matrix

The fourth dimension of the EU AI Act Risk Classification Matrix introduces two high-risk classification categories:

Annex I

Annex III

This dimension is visualized in yellow below.

The key lesson is that Provider Annex I high-risk requirements are not the same as Provider Annex III high-risk requirements. The simple explanation for this dimension is that some high risks are higher than others. The detailed explanation needs one final history lesson.
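For readers who think in data structures, here is a minimal Python sketch of how a single cell of the matrix could be modeled, with one field per dimension. It is a hypothetical illustration under my own assumptions: the enum and class names are invented, only the operator roles mentioned in this post are included, the fifth dimension is left out, and the one consistency rule (the annex dimension applies only to high risk) is a simplification, not legal logic taken from the Act.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    # First dimension: the four widely publicised categories
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


class OperatorRole(Enum):
    # Second dimension: only the roles mentioned in this post (not the full list)
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"


class GpaiRisk(Enum):
    # Third dimension: the two GPAI model categories described above
    SYSTEMIC = "systemic"
    BASE = "base"


class HighRiskAnnex(Enum):
    # Fourth dimension: only meaningful for high-risk systems
    ANNEX_I = "Annex I"
    ANNEX_III = "Annex III"


@dataclass(frozen=True)
class MatrixCell:
    """One coordinate in a hypothetical four-dimensional classification."""
    tier: RiskTier
    role: OperatorRole
    gpai: Optional[GpaiRisk] = None        # None: no GPAI model in the AI system
    annex: Optional[HighRiskAnnex] = None  # None: not a high-risk system

    def __post_init__(self) -> None:
        # Simplified, hypothetical rule: high risk always sits in Annex I or Annex III,
        # and nothing outside high risk gets an annex.
        if self.tier is RiskTier.HIGH and self.annex is None:
            raise ValueError("high-risk cells need an annex (Annex I or Annex III)")
        if self.tier is not RiskTier.HIGH and self.annex is not None:
            raise ValueError("an annex only applies to high-risk cells")


# Two Provider high-risk cells that differ only in the fourth dimension
annex_i = MatrixCell(RiskTier.HIGH, OperatorRole.PROVIDER, annex=HighRiskAnnex.ANNEX_I)
annex_iii = MatrixCell(RiskTier.HIGH, OperatorRole.PROVIDER, annex=HighRiskAnnex.ANNEX_III)
print(annex_i == annex_iii)  # False: distinct cells, distinct requirement sets
```

The two Provider cells compare as unequal because they differ in the annex field, which mirrors the key lesson: Provider Annex I and Provider Annex III obligations live in different cells of the matrix.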

A brief history of EU annexation

You don’t have to be a Russian or a Brexit supporter to think that annexation is a formal act whereby the EU proclaims its sovereignty over territory hitherto outside its domain. But you must disregard this definition because it is out of context. In the current context, an annex is an extra or subordinate part of the main EU AI Act document, and annexation is simply a process whereby an EU bureaucrat creates an EU AI Act annex.

The Annex I and Annex III risk classification categories were driven by regulatory intelligence and performance incentives. Intelligent regulators figured out that certain pre-existing regulations, which are referred to in Annex I, already placed significant emphasis on risk management. They decided that if high-risk AI systems were already managed under these Annex I regulations, it made more sense for the additional AI-specific risk (the delta) to be managed within the existing regulatory scope and risk management systems. And so it came to be.

In the initial version of the EU AI Act, high-risk AI systems that were not covered by Annex I regulations were referred to as Annex II. And it would have remained Annex II, had it not been for intelligent bureaucrats, who figured out that their bonuses were driven by the number of annexes they created. And so it came to be that they copied and pasted Annex II into Annex III.

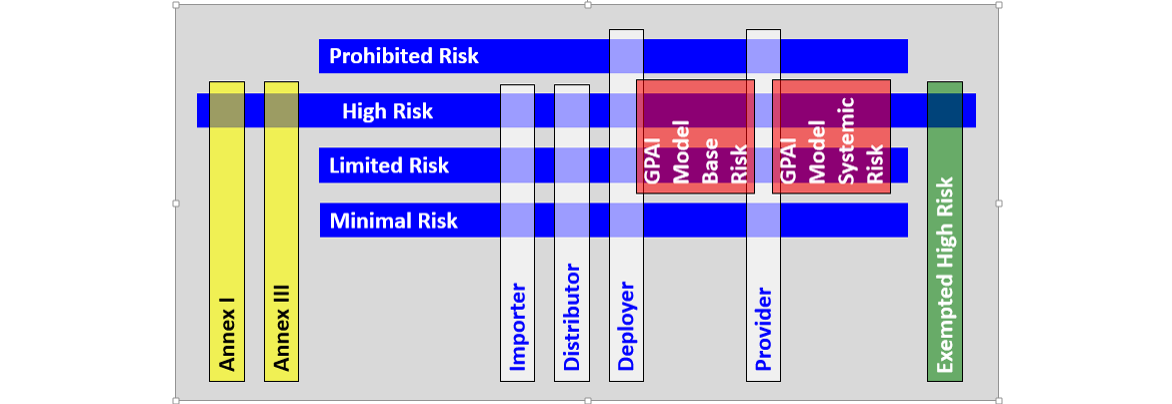

Introducing the EU AI Act Risk Classification Matrix

When you are a risk manager, you do not need more than two dimensions to classify risk. But when you are a bureaucrat, you think: why stop at four dimensions when you can have five?

This brings us to the EU AI Act Risk Classification Matrix with five dimensions. It is visualized below in its full splendor, with the fifth dimension in green. No prize for guessing what the fifth dimension is about. The clue is in the name!
